OK Google!

With the Actions On Google Platform

Assistant can do more than you think!

GitHub Repository Page


Actions

With a network connection

get assistance in a blink


Users

unique users per week


Languages

Assist you

in your language

The services will be deprecated by Google...

Conversational Actions will no longer be accessible from 2023/06/13!

More information


Fundamentals

How Actions work

An Action is a new type of application with a voice-based interface that works with Google Assistant.

After the user tells Google Assistant that they want to use a specific Action, Google searches the Actions On Google platform for an Action with the corresponding name.

The user is then directed by Google Assistant to the Action interface.

From this moment on, the role of Google Assistant shifts to assisting with speech recognition and information delivery.

The task of recognizing the intent behind the user's input and giving the corresponding response is transferred to the Action designed by the developer.

Devices that support Google Assistant come with a built-in, officially supported third-party platform known as Actions On Google (AoG).

When a user asks to interact with an Action on this third-party platform, the system automatically searches for the corresponding Action to be served.

Once it is found, control of the response is handed over to the third-party Action.

At this point, Google Assistant's role shifts to that of an intermediary that performs speech recognition and presents the third-party Action's content.

In practice, the entire interaction is carried out by exchanging information through APIs in JSON format.
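To make the JSON exchange more concrete, here is a minimal sketch of a handler that reads a request and returns a reply. It assumes the request and response follow the general shape of a Dialogflow ES webhook payload (`queryResult`, `intent.displayName`, `parameters`, `fulfillmentText`); the intent name `GreetUser`, the `name` parameter, and the sample values are hypothetical and do not come from any of the Actions described here.

```python
import json


def handle_webhook(request_body: str) -> str:
    """Parse a Dialogflow-style JSON request and build a JSON reply."""
    request = json.loads(request_body)
    query_result = request.get("queryResult", {})
    intent_name = query_result.get("intent", {}).get("displayName", "")
    parameters = query_result.get("parameters", {})

    if intent_name == "GreetUser":  # hypothetical intent name
        name = parameters.get("name") or "there"
        reply = f"Hello, {name}!"
    else:
        reply = "Sorry, I did not understand that."

    # The reply text is what the Assistant would read back to the user.
    return json.dumps({"fulfillmentText": reply})


# Hypothetical sample request, reduced to the fields used above.
sample_request = json.dumps({
    "queryResult": {
        "queryText": "Say hello to Amy",
        "intent": {"displayName": "GreetUser"},
        "parameters": {"name": "Amy"},
    }
})

print(handle_webhook(sample_request))
```

A real payload carries many more fields (session, language, contexts); the sketch keeps only what is needed to show one request and one response.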

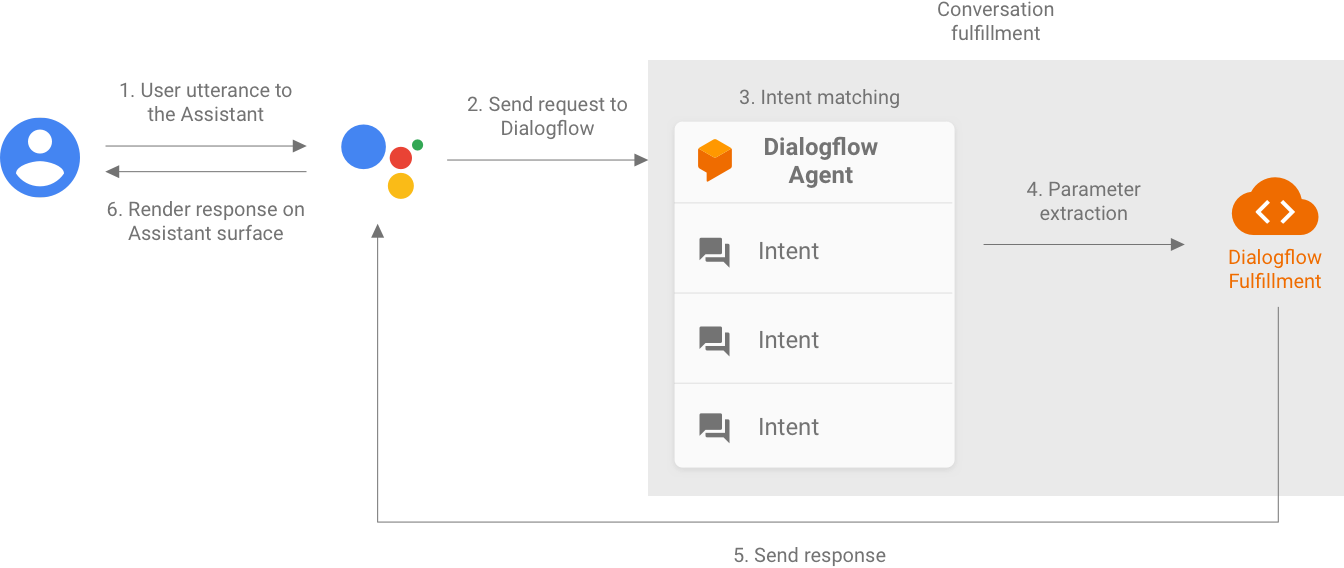

As the user interacts with the Action, the following pipeline is carried out.

Until the user's request is fulfilled or the Action is interrupted, each round of the conversation proceeds in this way:

- 1. The user speaks to the Assistant

- 2. The Assistant sends the recognized sentence to the Action

- 3. Within the Action, Dialogflow, which contains a pretrained model, determines the user's intent

- 4. For complex responses, Dialogflow extracts the corresponding parameters from the sentence and sends them to the Fulfillment, which performs data fetching or API calls

- 5. The Fulfillment returns the response for the matched intent to the Assistant

- 6. The Assistant receives the response and presents it to the user
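The six steps above can be sketched as a single conversation round. This is only an illustration: the keyword rule in `detect_intent` stands in for Dialogflow's pretrained model, and the `GetWeather` intent, the `city` parameter, and the `FAKE_WEATHER` table are hypothetical names invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    parameters: dict = field(default_factory=dict)


# Hypothetical data source standing in for a real API call.
FAKE_WEATHER = {"taipei": "sunny"}


def detect_intent(sentence: str) -> Intent:
    """Step 3: a keyword rule standing in for Dialogflow's intent matching."""
    words = sentence.lower().rstrip("?.!").split()
    if "weather" in words and "in" in words:
        city = " ".join(words[words.index("in") + 1:])
        if city:
            # Step 4: pass the extracted parameter on to the fulfillment.
            return Intent("GetWeather", {"city": city})
    return Intent("Fallback")


def fulfillment(intent: Intent) -> str:
    """Steps 4-5: fetch data and build the response for the matched intent."""
    if intent.name == "GetWeather":
        city = intent.parameters["city"]
        forecast = FAKE_WEATHER.get(city, "unknown")
        return f"The weather in {city.title()} is {forecast}."
    return "Sorry, I did not catch that."


def conversation_round(recognized_sentence: str) -> str:
    """Steps 2-6: the Assistant forwards the sentence and relays the reply."""
    return fulfillment(detect_intent(recognized_sentence))


print(conversation_round("What is the weather in Taipei?"))
```

In a real Action the Assistant handles speech recognition (steps 1 and 2) and presentation (step 6); only the middle of the round is the developer's responsibility.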

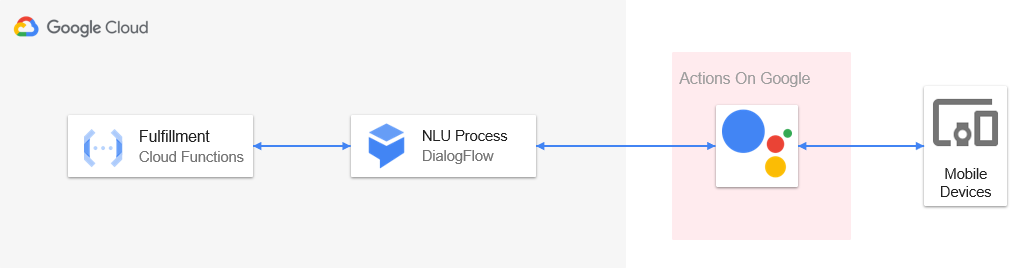

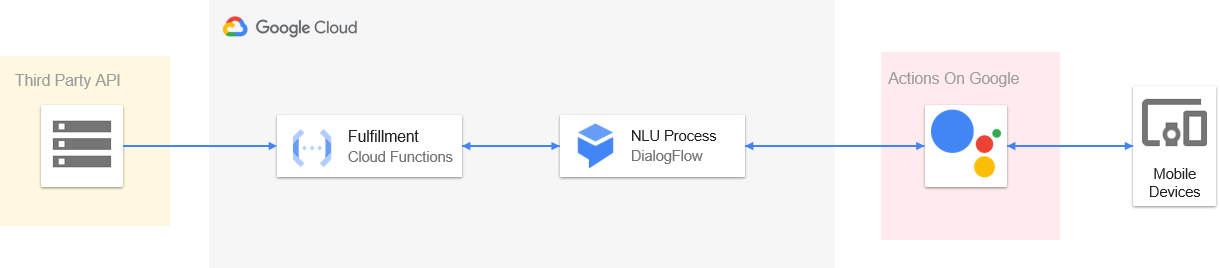

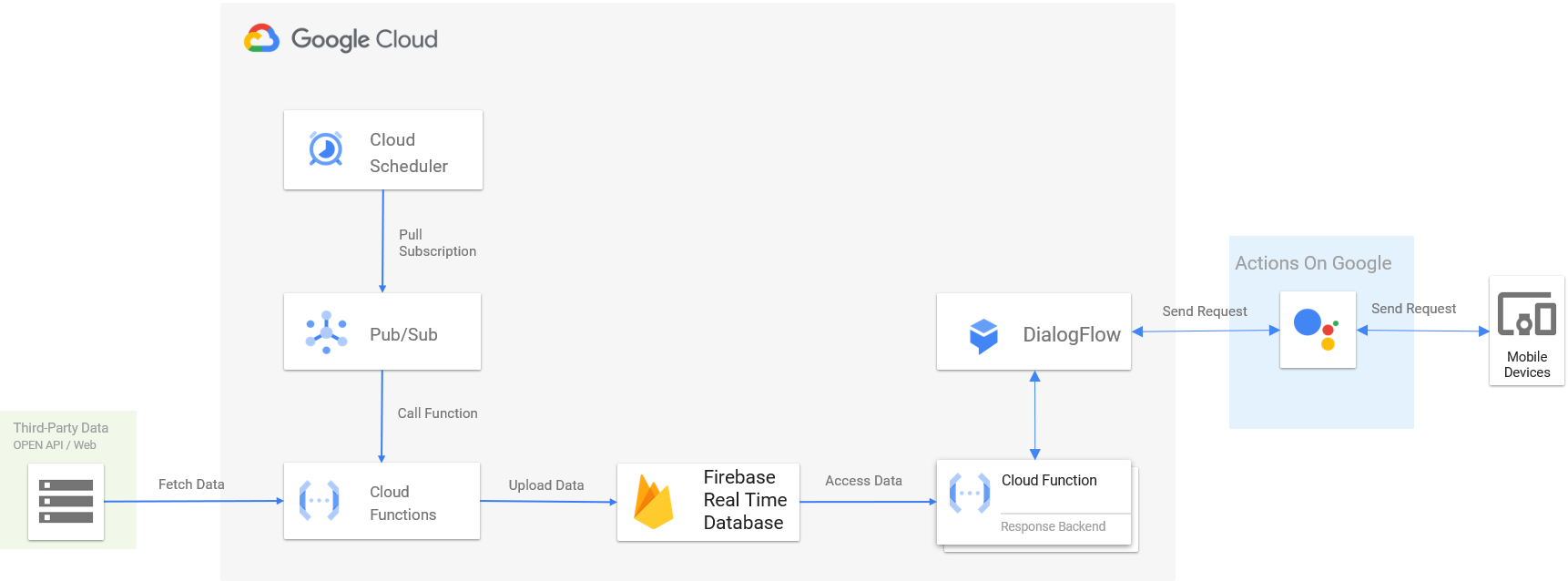

Depending on the requirements, four types of structure were built.

1. No data fetching is required; this is the simplest structure.

2. Data fetching is required; the data is fetched and processed through APIs in the same Cloud Function.

3. The frontend and backend are separated: the data-fetching service is packaged in one independent Cloud Function, while the other focuses on the Dialogflow logic and responses.

4. One Cloud Function fetches data automatically at preset times and writes the updates to the Firebase Realtime Database, while the other focuses on the Dialogflow logic and reads the data from the database.
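The fourth structure can be sketched as two independent functions that share a data store. This is a minimal sketch under stated assumptions: `InMemoryDatabase` is a stand-in for the Firebase Realtime Database, and `fetch_latest_data`, the `latest` path, and the `headline` field are hypothetical names, not taken from the actual project.

```python
class InMemoryDatabase:
    """Stand-in for Firebase Realtime Database (assumption for this sketch)."""

    def __init__(self):
        self._data = {}

    def set(self, path, value):
        self._data[path] = value

    def get(self, path, default=None):
        return self._data.get(path, default)


def fetch_latest_data() -> dict:
    """Hypothetical external API call; returns fixed data here."""
    return {"headline": "Example headline"}


def scheduled_update(db: InMemoryDatabase) -> None:
    """First function: would run on a timer and refresh the stored data."""
    db.set("latest", fetch_latest_data())


def conversation_fulfillment(db: InMemoryDatabase) -> str:
    """Second function: answers the user from the database only."""
    record = db.get("latest")
    if record is None:
        return "The data is not ready yet."
    return f"Here is the latest: {record['headline']}"


db = InMemoryDatabase()
before = conversation_fulfillment(db)  # nothing stored yet
scheduled_update(db)                   # simulate one scheduled run
after = conversation_fulfillment(db)
print(before)
print(after)
```

The point of the split is that the conversation function never waits on the external API: it only reads whatever the scheduled function last stored.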

Google Developer Group DevFest

Hosted by GDG Taiwan

In this online talk:

I shared a brief overview of the VUI design process and how to apply it to develop a Google Assistant voice app.

I also shared information about a complete tutorial with the listeners.

Onsite page

In this online talk:

I shared my experience in developing Google Assistant conversational Actions.

I also walked the audience through creating a simple voice application.

Onsite page

Publicized by Google Taiwan

* Actions marked with an asterisk are available in Chinese (Taiwan) only

July 31, 2019

Google Assistant connects local services in Taiwan; if you need some fun, just ask

(Google 助理連結台灣在地服務 吃喝玩樂一問就知)

• Meal Decider • Cows and Bulls • Master of riddle * • Sport Meeting of Brain *

Check it out

March 31, 2020

Protecting health together: make good use of Google so that life is no longer boring

(共同守護健康 善用 Google 不無聊)

• Text Solitaire *

Check it out